Layout-guided Indoor Panorama Inpainting with Plane-aware Normalization

National Tsing Hua University

National Tsing Hua University

We present an end-to-end deep learning framework for indoor panoramic image inpainting. Although previous inpainting methods have shown impressive performance on natural perspective images, most fail to handle panoramic images, particularly indoor scenes, which usually contain complex structure and texture content. To achieve better inpainting quality, we propose to exploit both the global and local context of the indoor panorama during the inpainting process. Specifically, we take the low-level layout edges estimated from the input panorama as a prior to guide the inpainting model in recovering the global indoor structure. A plane-aware normalization module is employed to embed plane-wise style features derived from the layout into the generator, encouraging local texture restoration from adjacent room structures (e.g., ceiling, floor, and walls). Experimental results show that our work outperforms current state-of-the-art methods on a public panoramic dataset, both quantitatively and qualitatively.

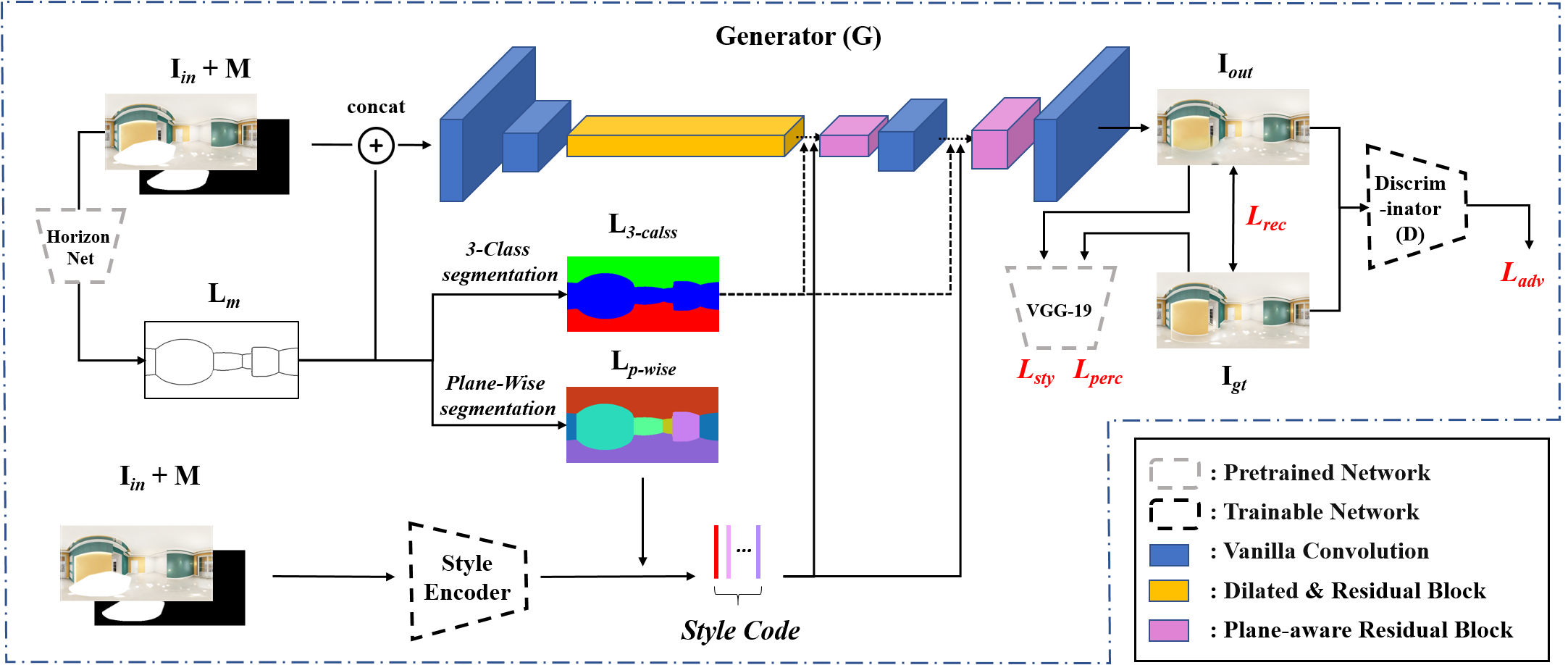

Architecture overview. Our network architecture follows the conventional generative adversarial network setup: an encoder-decoder generator supervised by low- and high-level loss functions and by a discriminator. Given a masked indoor panoramic image $I_{in}$ with a corresponding mask $M$, our system uses an off-the-shelf layout prediction network to predict a layout map $L_m$. The low-level boundary lines in $L_m$ serve as a conditional input to our network to assist the inpainting. Then, we compute two semantic segmentation maps from the layout map $L_m$, denoted $L_{3\text{-class}}$ and $L_{p\text{-wise}}$, where the latter is used to generate plane-wise style codes for the ceiling, the floor, and the individual walls. Finally, these per-plane style codes, together with $L_{3\text{-class}}$, are fed to a structural plane-aware normalization module to constrain the inpainting.
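The caption does not specify how $L_m$ is rasterized into the boundary-line input and the two segmentation maps. The sketch below shows one way to do it under a hypothetical layout format, in the style of column-wise layout predictors: for each image column, the row of the ceiling-wall boundary and the row of the floor-wall boundary, plus a sorted list of wall-corner columns. The function name `layout_to_maps` and the label convention (ceiling 0, floor 1, walls 2, 3, ...) are illustrative assumptions, not the authors' code.

```python
from bisect import bisect_right
from typing import List, Tuple

Grid = List[List[int]]

CEILING, FLOOR, WALL = 0, 1, 2  # labels of the 3-class map (assumed convention)


def layout_to_maps(
    ceil_row: List[int], floor_row: List[int], corners: List[int], height: int
) -> Tuple[Grid, Grid, Grid]:
    """Return (edge map, 3-class map, plane-wise map) for an H x W panorama.

    Hypothetical layout format: ceil_row[u] / floor_row[u] are the rows of the
    ceiling-wall and floor-wall boundaries in column u; `corners` is a sorted
    list of wall-corner columns.
    """
    width = len(ceil_row)
    n_walls = max(len(corners), 1)
    edges = [[0] * width for _ in range(height)]
    three_class = [[WALL] * width for _ in range(height)]
    plane_wise = [[0] * width for _ in range(height)]

    for u in range(width):
        # Columns before the first corner and after the last corner belong to
        # the same wall, because an equirectangular panorama wraps around.
        wall_id = bisect_right(corners, u) % n_walls
        for r in range(height):
            if r < ceil_row[u]:
                three_class[r][u], plane_wise[r][u] = CEILING, 0
            elif r > floor_row[u]:
                three_class[r][u], plane_wise[r][u] = FLOOR, 1
            else:
                plane_wise[r][u] = 2 + wall_id  # walls become planes 2, 3, ...
        # Horizontal boundary lines (ceiling-wall and floor-wall).
        edges[ceil_row[u]][u] = 1
        edges[floor_row[u]][u] = 1

    # Vertical boundary lines at the wall corners.
    for c in corners:
        for r in range(ceil_row[c], floor_row[c] + 1):
            edges[r][c] = 1
    return edges, three_class, plane_wise
```

For example, `layout_to_maps([1, 1, 1, 1], [4, 4, 4, 4], [1, 3], height=6)` describes a 6 x 4 toy panorama with two walls; every row between the ceiling and the floor of the plane-wise map reads `[2, 3, 3, 2]`, because the wrap-around segment is assigned to the same wall as the leftmost one.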

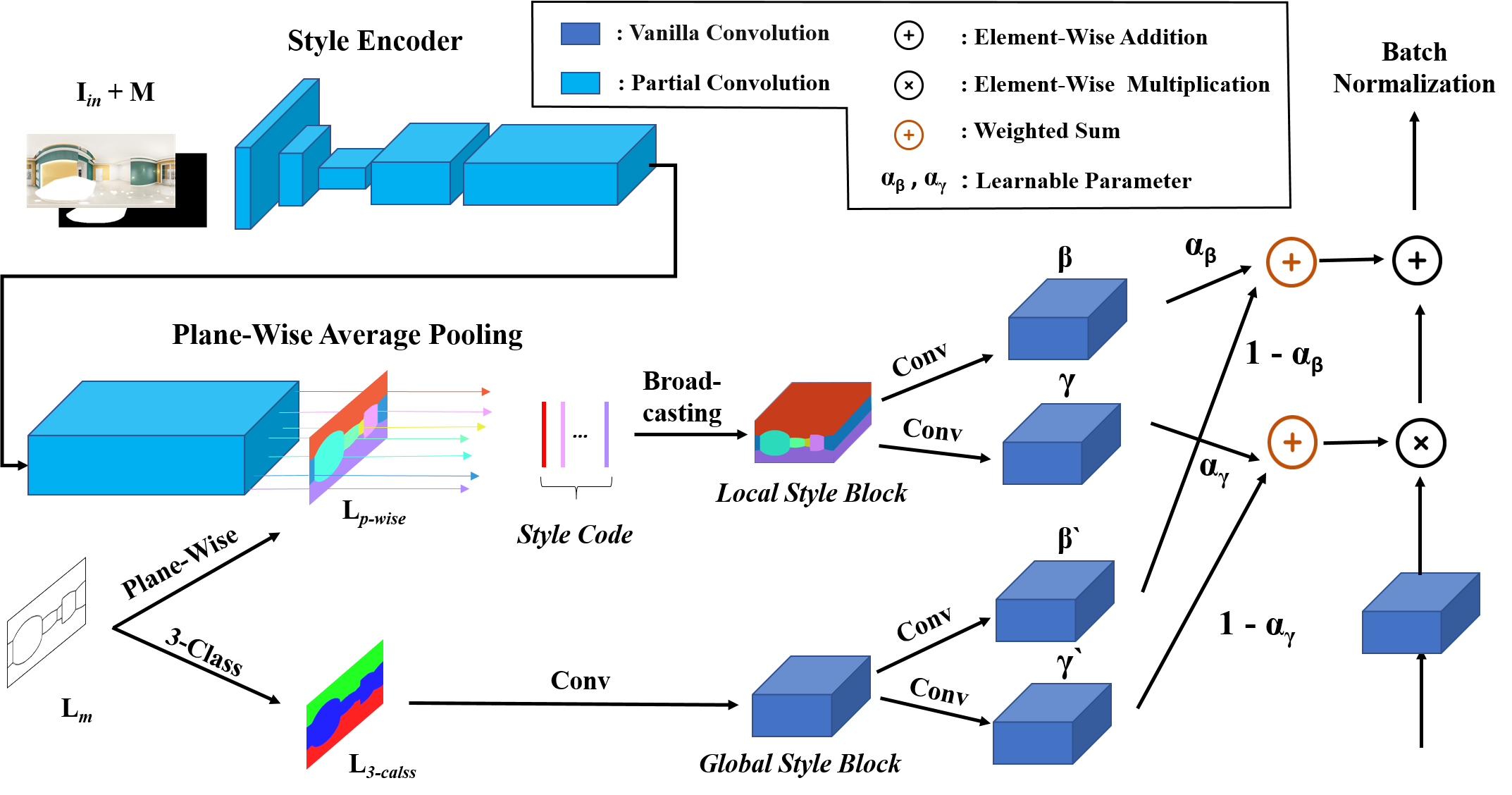

Plane-aware normalization. Given an incomplete indoor panoramic image $I_{in}$ with mask $M$, we first predict two normalization parameters, $\beta$ and $\gamma$, through several partial convolution blocks and a plane-wise average pooling step based on the plane-wise segmentation map $L_{p\text{-wise}}$. Second, we predict another set of normalization parameters, $\beta'$ and $\gamma'$, through several vanilla convolution blocks based on the 3-class segmentation map $L_{3\text{-class}}$. The final normalization parameters are then computed as weighted sums of the two sets, with weights given by the learnable parameters $\alpha_\beta$ and $\alpha_\gamma$.
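A minimal pure-Python sketch of how the two branches could be combined is given below. It rests on several assumptions that the caption does not confirm: the convolutional branches are replaced by precomputed single-channel maps passed in as arguments; plane-wise average pooling averages only pixels outside the hole; the weighted sum is taken as the SEAN-style convex combination $\gamma_{final} = \alpha_\gamma \gamma + (1 - \alpha_\gamma)\gamma'$ (and likewise for $\beta$); and features are instance-normalized before being modulated as $\gamma \hat{x} + \beta$. All function names are hypothetical.

```python
from math import sqrt
from typing import Dict, List

Grid = List[List[float]]
Labels = List[List[int]]


def plane_average_pool(feat: Grid, planes: Labels, valid: Labels) -> Grid:
    """Replace every pixel by the mean of the known pixels on its plane."""
    sums: Dict[int, float] = {}
    counts: Dict[int, int] = {}
    for f_row, p_row, v_row in zip(feat, planes, valid):
        for f, p, v in zip(f_row, p_row, v_row):
            if v:  # only pixels outside the hole contribute (assumption)
                sums[p] = sums.get(p, 0.0) + f
                counts[p] = counts.get(p, 0) + 1
    means = {p: sums[p] / counts[p] for p in sums}
    # Planes lying entirely inside the hole fall back to 0.0 (assumption).
    return [[means.get(p, 0.0) for p in p_row] for p_row in planes]


def blend(a: Grid, b: Grid, alpha: float) -> Grid:
    """Per-pixel convex combination alpha * a + (1 - alpha) * b."""
    return [[alpha * x + (1.0 - alpha) * y for x, y in zip(ra, rb)]
            for ra, rb in zip(a, b)]


def modulate(x: Grid, gamma: Grid, beta: Grid, eps: float = 1e-5) -> Grid:
    """Instance-normalize x over H x W, then apply gamma * x_hat + beta."""
    values = [v for row in x for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = sqrt(var + eps)
    return [[g * (v - mean) / std + b for v, g, b in zip(rx, rg, rb)]
            for rx, rg, rb in zip(x, gamma, beta)]


def plane_aware_norm(x: Grid, style_gamma: Grid, style_beta: Grid,
                     gamma_3c: Grid, beta_3c: Grid, planes: Labels,
                     valid: Labels, alpha_gamma: float, alpha_beta: float) -> Grid:
    """Blend the plane-pooled branch with the 3-class branch, then modulate x."""
    gamma = blend(plane_average_pool(style_gamma, planes, valid), gamma_3c, alpha_gamma)
    beta = blend(plane_average_pool(style_beta, planes, valid), beta_3c, alpha_beta)
    return modulate(x, gamma, beta)
```

Pooling per plane makes every pixel of, say, one wall share a single style value, so texture statistics from the known part of that wall can propagate into its missing part; the learnable weights then decide how much of that per-plane style is mixed with the coarser 3-class signal.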